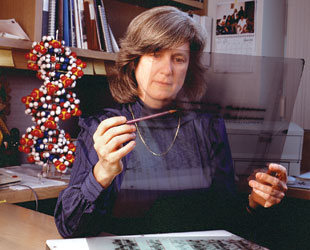

In the late 1970s, George Church began his graduate research with sequencing pioneer Walter Gilbert. In 2005, he developed one of the first next-generation sequencing technologies. AP PHOTO / LISA POOLE

As you read this article, perhaps on your smartphone or iPad, try to remember what the world was like on October 20, 1986, when the first issue of The Scientist was published.

Apple’s Macintosh computer was just two years old. The polymerase chain reaction (PCR) was celebrating its first birthday, while GenBank, all of four years old, held just under 10,000 sequences (a total of 9.6 million bases). The NCSA Mosaic web browser was still seven years away, and it would be another two years beyond that before J. Craig Venter and colleagues at the Institute for Genomic Research would first be able to read the genetic sequence of a “free-living organism,” Haemophilus influenzae.

The Scientist asked several luminaries of the genomics revolution to reflect on how the tools of their trade have changed over the past 25 years, and to look ahead to what the next quarter-century might bring. This is what they said.

THE SEQUENCING VISIONARY

As an undergraduate at Duke University in the mid-1970s, George Church, now a geneticist at Harvard Medical School, keyed in every known nucleic acid sequence, some 8,000 bases in all, mostly tRNAs, and folded them into three-dimensional shapes using software he had written. “I said, ‘Wow, this is a great game,’ ” he recalls. “Why don’t we just sequence everything in sight and fold it up?”

Church was off base on the folding notion. But his sequencing vision proved prescient. Ever since, he says, he has been “obsessed with making sequencing low-enough cost so that everybody could get their sequence and, ideally, share it.”

His journey began as a graduate student at Harvard University under Walter Gilbert at the time that Gilbert and his lab were just beginning to apply the DNA sequencing method that would earn him a Nobel Prize. Church recalls seeing plasmid and gene sequences for the first time—penicillinase and insulin, the lac repressor and interferon. “It seemed like every day there was a new set of very fundamental discoveries, and it was just easy,” he says—so easy, in fact, that he was forbidden to use DNA sequencing as the answer to any question on his grad-school preliminaries. “You’d say, well, I’ll just sequence it. It was like that answered everything.”

By the mid-’80s, Church and his mentor Gilbert were two of a handful of people arguing in favor of a human genome project. Most other biologists deemed the idea either practically impossible or a financial boondoggle. Church felt such a project should initially focus on technical improvements; others, though, lobbied successfully to “just turn the crank”—that is, to sequence everything, and fast. “To me, that was a bit of a disappointment,” he says. “I felt that the real exciting possibility was to bring the price down considerably. But the radical bring-down of price had to wait until victory was declared on the first human genome.”

Victory declared, Church and his team in 2005 published one of the first successful “next-generation” DNA sequencing technologies, using the strategy to decode E. coli in record time. Since then, technology development has exploded, far outpacing Moore’s Law. Gigabase-scale data sets are the norm rather than the exception. Yet even now, says Church, the journey is only beginning. “We’ve got about 10^19 base pairs to read before we’ve even scratched the surface,” he says.

Church’s vision for the future is both as simple and as grand as the one he held as an undergrad: to “read and write” biological systems, whether with an ultrafast, handheld DNA sequencer or with an organ “printer” capable of outputting cells and scaffold to form intact body parts. Using a genome-editing technique recently developed in his lab, he proposes to make industrial microorganisms, crops, and perhaps even humans resistant to all viruses. “We have a road map for this as the first ‘killer app’ for genome engineering, which is to change the genetic code genome-wide to something that the viruses don’t expect,” he says.

THE TOOL BUILDER

In June 1986, Leroy Hood, then at Caltech, published his prototype design for an automated DNA sequencer. Codeveloped with scientists at Applied Biosystems, the design included just a single narrow glass tube, or capillary, as the equivalent of four lanes on a standard sequencing gel of the period. Yet it contained all the elements later found in the 96-capillary Applied Biosystems ABI PRISM 3700 that was the workhorse of the Human Genome Project from Cambridge, Massachusetts, to Cambridge, UK.

Those elements, says Hood, were established one afternoon in the spring of 1982. Hood had been laboring to automate DNA sequencing for years, to no avail. At the time, DNA sequencing was a manual affair using radioactive nucleotides and slab gels. A competent technician could produce a few hundred base pairs per run. Hood and Caltech colleagues Lloyd Smith and Mike and Tim Hunkapiller put their heads together and came up with a working concept based on four key criteria: using capillary gel electrophoresis rather than a slab gel; four-color fluorescence; laser detection; and automation-centered engineering. The hardest part, Hood says, was working out the fluorescence chemistry—picking dyes that worked together and coupling them to phosphoramidite building blocks.

The idea the four came up with “turned out to be pretty similar to what we ended up doing,” Hood says, even though the first commercial automated sequencer, the Applied Biosystems 370A, launched in 1986, was actually still slab gel-based. The company wouldn’t release a capillary-based sequencer until 1995, but Hood’s design would eventually chew up bases by the billion for the Human Genome Project.

And those same design principles can still be glimpsed in today’s next-gen sequencers and other omics hardware, he says. “I think [the automated sequencer] opened us up to thinking about the kind of instrumentation that we are developing today for looking at genomic, proteomic, and metabolomic interactions, cell phenotypes, and so forth,” he says. “I think it ushered in the era of high-throughput biology.”

Hood, though, never wanted to only sequence genomes. Seeking to use genomics data as a platform for systems biology, he left Caltech in 1992 to found the cross-disciplinary Department of Molecular Biotechnology at the University of Washington. In 2000, he moved on again to launch the Institute for Systems Biology, also in Seattle. With his eye on the big picture, he cites a laundry list of technologies he anticipates will be utilized over the next quarter century: multiplexed protein analysis and single-cell genomics, high-throughput interactomics in vivo, and better molecular imaging. Now the goal is to mine and integrate these data sets to better understand the big biological picture.

“The idea with these new technologies is they will let us explore new areas of data space, and this in turn will give us new insights into biology and medicine,” he says.

THE GENE SEEKER

Mary-Claire King was a young professor at the University of California, Berkeley, in 1986, looking for the elusive molecular prey that would one day change the lives of many women: a gene for breast cancer.

When King had begun her search in the mid-1970s, few believed such a gene existed. Even as Harold Varmus, J. Michael Bishop, and others added terms like oncogene and tumor suppressor to the genetic lexicon, the conventional wisdom held that a disease like breast cancer had to be viral.

“I would start talks by saying ‘cancer is genetic, it’s always genetic,’ and it was hard for people to accept,” King recalls.

The notion that complex disorders such as cancer, diabetes, and schizophrenia can have underlying genetic roots is a given today. But in 1986, that was still far from firmly established. King, though, was convinced. With a combination of linkage analysis and a healthy dose of math, she scrutinized families in which breast cancer tended to cluster, trying to pinpoint a culprit gene.

It took the better part of two decades, but King ultimately identified a region on chromosome 17q that, in families very frequently struck by breast cancer, was co-inherited with the disease. She named the still-hypothetical gene BRCA1. Four years later, in 1994, after a breakneck search for the actual gene by dozens of teams around the world, researchers in Utah crossed the finish line first.

These days, classical gene finding has evolved. King has upgraded her toolkit to include exome and whole-genome sequencing, searching for affected genes in breast cancer, hearing loss, schizophrenia, and autoimmunity. With a sequencer and a good family tree, she acknowledges, the work that took her some 17 years to accomplish back then could be finished today in a few months.

“The fundamental questions of genetics haven’t really changed; what’s changed is our capacity to answer them,” King says. “We’re now in a position to be able to answer these questions with technology that simply didn’t exist before. But in a way, this technology is wasted on us. This technology should have been in the hands of Darwin and Mendel and much smarter people than any of us. But we’re the ones that have it, and so we can now go back and answer their questions.”

She can envision new questions and tools, too—such as the ability to collect and integrate disparate information from genomic, proteomic, metabolomic, and imaging data sets, “so that we can think across dimensions in an organism simultaneously.”

Suppose, for instance, that one could image the brain of a human and those of other primates and lower mammals, and understand—at the level of morphology, of the proteome, of the transcriptome, and of the genome—how they differ. Such integration could fundamentally change the way researchers approach questions in evolutionary and developmental biology. “We are quite close to being able to do each of those first steps,” King says, “but putting them together is not something that’s doable yet.”

In the next quarter century, though, it could be, as could other genetics-inspired advances in health care diagnostics, monitoring, and treatment. Genetics is only 150 years old, King notes, and her career spans about a quarter of that time. Genomics is younger still. “I feel unbelievably fortunate to have fallen into this field when I was young,” she says. “And I’m luckiest of all to have fallen into genetics at a time when the questions were being articulated very well and the technology was being developed, and now explosively developed, to a point that now we can all exploit it.”

“I hope to continue to do it for decades,” she says.
